How to Livestream a Shred Legend: Michael Angelo Batio 2017 Live Interview

Three 4K cameras, a video-enabled smartphone, three microphones, one guitar, one blaring amplifier, and one blazing shred legend streaming a television-quality video and audio broadcast to a 70,000-person internet audience through a single turbocharged iMac? Sure, no problem. Would you like fries with that?

A few weeks ago, the Cracking the Code team sat down for a thrilling and by turns terrifying livestream with the amazing Michael Angelo Batio.

We say terrifying, not just because Batio’s famous blade-like precision has lost none of its cutting edge after all these years, but also because of the nerve-racking panic of capturing it all as a live broadcast right as it happened.

Here’s how we did it.

Roll Camera

As with microphones, preamps, and converters in audio recording, the resulting video quality of any live broadcast will depend greatly on the quality of the video signal you feed it. For our master studio shot, we used one of the best of the current generation of full-frame, 4K-capable mirrorless cameras, the Sony A7S II:

For the closeup shots, we employed two Panasonic GH4s, a smaller “micro four-thirds” sensor camera that was one of the first to bring a 4K image and video-centric feature set to the prosumer market. Though it was introduced more than three years ago, the GH4 still delivers what you’d consider a cutting-edge picture when it is properly exposed, and particularly when its 4K output is downscaled to a more broadcast-friendly 1080p HD, as we did for our livestream:

In fact, despite their branding as “HD” cameras, previous generation DSLRs like the ubiquitous Canon 5D and Rebel lines don’t actually deliver 1080 HD-levels of detail, but rather something closer to 720 vertical lines of resolution. The more detailed 1080 version of the HD format is referred to euphemistically as “full HD” in videography parlance, presumably to leave some marketing wiggle room for the 720 format to share the HD moniker.

And while 720 is indeed greater than the 480 vertical lines of SD television and DVDs, and thus, still defensibly “high-definition”, the difference between consumer 720 and professional 1080 will be obvious to even a casual observer who compares their weekend soccer practice footage with the hyper-detailed look of the typical high-definition sports broadcast.

Television sports are still, for the most part, Full HD. They are also occasionally Full HD broadcast as 720, further complicating comparisons, but nevertheless preserving some of the hyper-detailed look of the original footage through intelligent downscaling algorithms.

It’s just that consumer cameras, regardless of format, are often neither. Even held steady on a tripod and filming a static subject, DSLRs like the Rebel are lucky to deliver 720 lines of actual resolution, regardless of the number of vertical lines in the files they record. Their smudgy image may be flattering for closeups, but it often fails to deliver the “you are there” feeling on detailed wide shots that you’ll get from an NFL broadcast.

Capture Big, Deliver Small

Suffice it to say that not all HD is created equal. In fact, you may be surprised to learn how it is created in the first place. Many of the best HD cameras, like the much-vaunted Arri Alexa used nearly ubiquitously on Hollywood and television productions, benefit from capturing images with greater-than-HD levels of detail. Even Canon’s own excellent C300 professional HD camera, which was released back in 2011, utilized a sensor which captured 4K for certain colors, and then strategically reduced that resolution later on in the image processing pipeline.

For various technical reasons better explained by an electrical engineer than a guitar player, this process produces an image which is generally more detailed than that produced by HD cameras which employ only HD-capable image sensors. It also reduces noise and increases color bit-depth at the same time. All of this assists in producing that surreal sense of “looking through a window” clarity that the best modern video cameras offer.
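For the curious, here is a rough sketch of why shrinking a big image cleans it up. It assumes the simplest possible downscaler, one that averages each 2x2 block of pixels into a single pixel, which is exactly the ratio between 4K UHD (3840x2160) and 1080p (1920x1080). Real cameras and capture devices use more sophisticated filters, and every function name here is our own invention for illustration.

```python
import random
import statistics


def downscale_2x(image):
    """Halve width and height by averaging each 2x2 block of pixels."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1]
             + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


def noise_level(image):
    """Standard deviation of every pixel value in the image."""
    return statistics.pstdev([value for row in image for value in row])


def noisy_gray_frame(width, height, level=128.0, sigma=10.0, seed=1):
    """Flat gray frame plus Gaussian noise, a stand-in for sensor noise."""
    rng = random.Random(seed)
    return [[rng.gauss(level, sigma) for _ in range(width)]
            for _ in range(height)]


frame = noisy_gray_frame(200, 200)
small = downscale_2x(frame)
print(f"noise before downscaling: {noise_level(frame):.2f}")
print(f"noise after downscaling:  {noise_level(small):.2f}")
```

Averaging four independent noisy samples cuts the random noise roughly in half. The averages can also land between the original integer steps, which is one way to picture where the extra tonal precision comes from.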

From an audio perspective, you can think of this a little like the difference between recording in 44.1 kHz versus 96 kHz. Various technical processes, like the low-pass filtering necessary to prevent frequency aliasing, make for arguably more natural treble rolloff in a 96 kHz pipeline than even the best 44.1 kHz pipeline. In fact, video cameras often employ low-pass filters for precisely the same reason, to prevent fine details in the scene, such as the fabric weaves in clothing and upholstery, from producing false patterns and color in the resulting image. The blurring effect of these filters will be less noticeable when reducing a larger image to a smaller one for final delivery.
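To put a number on the aliasing problem, here is a minimal sketch of where an unfiltered tone ends up after sampling. The function name and the 30 kHz test tone are our own choices for illustration; the folding arithmetic itself is standard sampling theory.

```python
def alias_frequency(tone_hz, sample_rate_hz):
    """Frequency at which a pure tone appears after sampling
    when no low-pass filter is applied first."""
    nyquist = sample_rate_hz / 2.0
    folded = tone_hz % sample_rate_hz
    # Anything above Nyquist is mirrored back down into the audible band.
    return folded if folded <= nyquist else sample_rate_hz - folded


for rate in (44_100, 96_000):
    print(f"30 kHz tone sampled at {rate} Hz shows up at "
          f"{alias_frequency(30_000, rate)} Hz")
```

At 44.1 kHz, the ultrasonic tone folds down to 14.1 kHz, squarely in the audible range, so the filter has to cut steeply just above 20 kHz. At 96 kHz, the same tone stays at 30 kHz, and the filter has a much wider band in which to roll off gently.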

Similarly, even in the CD era, recording 24 bits and delivering 16 bits was the easiest way to optimize the quality of the final mix. A select few of your listeners in 2007 might have been able to play back 24-bit resolution files. But even if your audience listened primarily on consumer-grade 16-bit equipment, most of us in audio production moved to 24 bits for reasons of gain staging, dynamic range, and noise floor, and never looked back.
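The bit-depth argument can be sketched with the textbook formula for an ideal quantizer and a full-scale sine wave. This is theory only: real converters fall short of the 24-bit figure, and the function name is ours.

```python
import math


def dynamic_range_db(bits):
    """Theoretical signal-to-noise ratio of an ideal quantizer
    for a full-scale sine wave (about 6.02 * bits + 1.76 dB)."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


for bits in (16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
```

That works out to roughly 98 dB for 16 bits and roughly 146 dB for 24 bits. In practical terms, you could record at 24 bits with peaks a conservative 18 dB below full scale and still have more theoretical range than a 16-bit file driven right to the top.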

To 4K or not to 4K?

The bottom line is that capturing in 4K and delivering in HD produces an image which beats the pants off HD images from even a few years ago. Ironically, the converse is also true. Televisions are rapidly becoming 4K-capable, and at reasonable price points to boot. But an HD image which truly approaches 1,080 lines of vertical resolution will still look amazing to most viewers, even after upscaling by the television itself to fill its 4K real estate.

The reason is that there is only so much detail the human eye can perceive while sitting a given distance away from a video display. And while video professionals often debate what that actual figure is, it’s clear that we’re getting closer to it, for less money, than ever before.
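As a back-of-the-envelope illustration, here is a sketch that assumes the common rule of thumb that 20/20 vision resolves about one arcminute of angle. That figure is precisely the kind of number professionals argue about, and the 55-inch screen and function name are hypothetical, so treat the output as a ballpark and nothing more.

```python
import math

ONE_ARCMINUTE = math.radians(1 / 60)  # assumed acuity limit, not a settled figure


def max_useful_distance_in(diagonal_in, rows, aspect=(16, 9)):
    """Viewing distance in inches beyond which adjacent pixel rows
    subtend less than one arcminute and can no longer be told apart."""
    w, h = aspect
    screen_height = diagonal_in * h / math.hypot(w, h)
    pixel_pitch = screen_height / rows
    return pixel_pitch / math.tan(ONE_ARCMINUTE)


for label, rows in (("1080p", 1080), ("4K UHD", 2160)):
    feet = max_useful_distance_in(55, rows) / 12
    print(f"55-inch {label}: full detail visible out to about {feet:.1f} ft")
```

Under those assumptions, a viewer sitting more than about seven feet from a 55-inch screen is already past the point where 1080 rows can be individually resolved, and the 4K threshold is half that distance.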

Compute!

But what do you plug those cameras into? Our studio is powered by a late-generation Retina 5K iMac with a quad-core 4 GHz i7 processor, a maxed-out video card, and a terabyte of internal SSD storage. If nothing else, the slim configuration options offered by Apple make this kind of decision-making easy. In our experience on the bleeding edge of audio and video production, your dollar goes the farthest when maxing out the configurator on Apple’s website and hitting the “Add to Bag” button.

The good news is that even this future-proofing approach toward hardware purchases will not necessarily break the bank. We adopted the above machine from foster care at Apple, as an officially refurbished item. In other words, the machine arrived fully warrantied by Apple itself, while sneaking in just under the $3K mark. This was a savings of several hundred bucks compared to its closed-box brethren.

Interfacing the Music

So we already knew what kind of compute power we were working with. The question was how to address it.

And the answer, again, proved simple by virtue of the scarcity of available options. Multi-channel audio interfaces are the norm in recording studios, but there are relatively few off-the-shelf devices capable of capturing multiple HD video signals simultaneously, and even fewer that can handle multiple 4K video signals. So the bulk of the prosumer market is single-channel interfaces connected by either Thunderbolt or USB.

Of those, Magewell’s USB line of HDMI video capture interfaces is the most plug-and-play choice as of this writing, requiring no drivers on either Windows or Mac, and accepting a wide range of resolutions and frame rates. In fact, despite their tiny physical size and convenient reliance on USB bus power (look, Mom, no plugs!), the Magewell devices do all their downscaling internally, delivering to the host computer a ready-made 1080p image that requires no extra effort from the CPU or GPU.
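To see why downscaling inside the capture device matters, here is some rough arithmetic. It assumes uncompressed video at 30 frames per second and 2 bytes per pixel (8-bit 4:2:2). We did not measure either figure for this setup, so both are placeholders for illustration, as is the function name.

```python
def uncompressed_bytes_per_second(width, height, fps=30, bytes_per_pixel=2):
    """Raw video data rate. The fps and bytes_per_pixel defaults are
    assumptions for illustration, not measured values."""
    return width * height * bytes_per_pixel * fps


FORMATS = {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}

for name, (width, height) in FORMATS.items():
    rate = uncompressed_bytes_per_second(width, height)
    print(f"{name}: {rate / 1e6:.0f} MB/s per camera, "
          f"{3 * rate / 1e6:.0f} MB/s for three cameras")
```

Whatever the true frame rate and pixel format, the ratio holds: a 4K frame carries four times the pixels of a 1080p frame, so every camera that arrives pre-shrunk saves the computer three quarters of the incoming data.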

Going Live

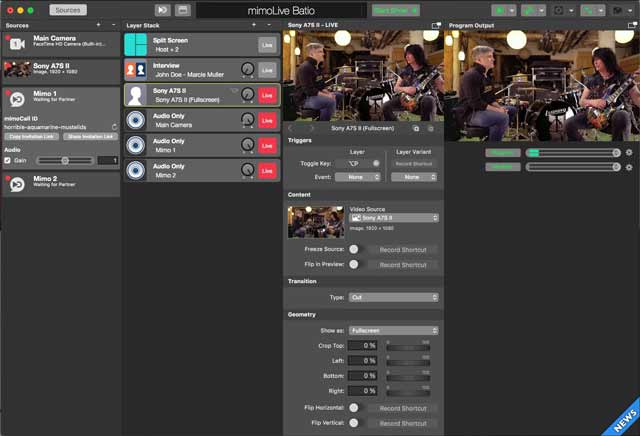

Now that our video signals are inside the computer, we need a way of switching between them and shipping them on their way to the intertubes. After several months of testing broadcast applications, we settled on Mimolive from Boinx Software:

For musicians considering a livestreaming setup for performance or teaching, it’s worth a serious look. At a few hundred bucks a year by subscription, Mimolive’s feature set simply cannot be equaled for less than thousands of dollars’ worth of business-class broadcast hardware and software. And its ease of use, considering the number of features it allows a single operator on a single machine to perform, doesn’t exist at any price point that we are aware of.

Specifically, Mimolive functions like a DAW for video, accepting camera inputs, switching between them or mixing them in artful ways, and streaming the resulting video output to the platform of your choice, be that YouTube, Facebook, or otherwise. This much can be done with any of a number of leading broadcast applications. Telestream’s Wirecast is a popular choice, and the one to which we directly compared Mimolive.

Even though the output of both Mimolive and Wirecast is determined in large part, again, by the video signals you feed them, Mimolive’s image output seemed notably more detailed. We can’t pinpoint precisely why that is. Both applications leverage hardware-accelerated H.264 encoders on the GPU, so it isn’t obvious why one would produce a more detailed HD frame than the other. But what we can say is that we consistently preferred the Mimolive image to the Wirecast image in our tests.

Hunting the Skype-A-Saurus

But we didn’t just want to film the studio with lots and lots of pixels. We also wanted to take live calls from viewers. In fact, due to its nefarious complexity, the challenge of cobbling together all the hardware and software necessary to do this via Skype has infamously been dubbed “building a Skype-a-Saurus” by the video production community.

Our initial attempts at solving the call-in problem involved running both Wirecast and Skype on the same machine, and feeding the output of one to the other in the form of a virtual video camera feed. This is a built-in facility that Wirecast offers precisely for this purpose, i.e. feeding its master video output to other applications where it will appear like a webcam input.

But this process ate up precious CPU cycles, and still left us at the mercy of Skype’s draconian compression algorithms. As such, we were never able to get better than choppy frame rates, macro-blocky video, and low-bitrate, “underwater”-sounding audio. Aggressive compression on audio signals might be fine for the next time you discuss the plummeting figures at your quarterly sales meeting. But for musical sounds it’s positively torture to listen to. Do you want your livestream to sound like a 128 kbps Napster mp3 circa the year 2000? Try a Skype call for truly retro musical audio quality.

Feeding the Skype video into Wirecast was equally dreadful, requiring an active screen capture of the Skype portion of the desktop display, eating up even more CPU. And woe betide he who accidentally moves the PornHub window in front of the Skype window. (Not that I would ever do such a thing.) That this worked at all, even with copious dropped frames on the broadcast output, was at least a rousing testament to Moore’s Law.

Upgrading to Business Class

Microsoft, the current owner of Skype, does offer a business-class solution for all this called Skype TX, through which an external hardware box costing upwards of four or five thousand dollars takes all the Skype calls, and outputs those video feeds back to the broadcast machine.

Not only does this external box require its own operator, but those video feeds then become additional “cameras” in your video switching setup, requiring their own Magewell-type video interfaces. And those callers will also need their own audio monitoring “mix-minus” feeds so they can hear what the hosts are talking about without also hearing themselves.

All that being said, removing Skype from the broadcast machine also removes its CPU hit and potentially increases its frame rate and overall video quality. So… we took the hint and tried something similar.

Divide and Conquer

We put Skype on another machine, a late-generation Mac Mini. We captured its screen with a Magewell and fed it into the broadcast machine like a camera. We also ran Wirecast’s master output right out of the broadcast machine entirely, through a Blackmagic Intensity Shuttle interface, and then back into yet another Magewell device on the Mini. On the Mini, we selected the input Magewell as the camera in Skype, so the caller could see what was going on in the studio.

On the audio side, we created a setup in Logic Pro X utilizing aux sends to multiple outputs to generate mix-minuses for the hosts and caller, along with a more complete mix of all the parties for Wirecast itself:

This type of complex routing is similar to what an audio engineer would create on a standalone hardware mixer in a live television broadcast studio. The difference here is that we’re running it all in software on the broadcast machine itself. Amazing times we live in.
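If the term is new to you, a mix-minus is simple to describe in code: each participant’s monitor feed is the full mix with that participant’s own signal left out. The sketch below is only an illustration of the idea, with made-up participant names and tiny integer “audio” blocks. It is not how Logic or any broadcast application implements its routing.

```python
def mix_minus(sources):
    """Return the full mix plus one monitor feed per participant that
    contains every source except that participant's own signal.

    sources maps a participant name to a list of samples; all lists
    are assumed to be the same length.
    """
    length = len(next(iter(sources.values())))
    full_mix = [sum(block[i] for block in sources.values())
                for i in range(length)]
    feeds = {
        name: [total - own for total, own in zip(full_mix, block)]
        for name, block in sources.items()
    }
    return full_mix, feeds


# Hypothetical three-way conversation, three samples each.
sources = {
    "host": [1, 2, 3],
    "guest": [10, 20, 30],
    "caller": [100, 200, 300],
}
full_mix, feeds = mix_minus(sources)
print("broadcast mix:", full_mix)
print("caller hears: ", feeds["caller"])
```

The broadcast gets everyone, while the caller’s feed contains only the host and guest. Without that subtraction, the caller would hear their own voice coming back a fraction of a second late.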

Then, just like we sent the master video feed to the Skype machine, we also sent the mix-minus audio for the lone Skype caller back to that same machine, this time via a stereo 1/8″ cable out of the Retina iMac’s built-in audio port. Now the Skype caller could hear everyone except themselves. The rest of the audio mixes were fed through a pair of virtual buses straight from Logic into Wirecast, without leaving the computer, using Rogue Amoeba’s clever Loopback audio routing tool.

Short story, it worked. It was crazy complex. It was expensive. Not only did this require two computers and three additional interfaces (two Magewells and a Blackmagic), it also required a Wirecast upgrade costing several hundred bucks for a “pro” feature that would allow Blackmagic video output in the first place.

And after all this, the video and audio quality was still poor because, well, Skype. Needless to say, we didn’t even bother with the Skype TX hardware because I didn’t want to sell my car, and I still need my kidneys for future binge drinking episodes.

Mimo Can You Hear Me?

Enter Mimolive’s “Mimocall” feature to the rescue. Mimocall is a built-in feature of the Mimolive broadcast application which automatically routes incoming video calls through Boinx’s proprietary Skype-style servers, straight to your broadcast machine, over the internet. You read that correctly: built-in. I’ll type that again to emphasize the absurdity of this: built-in. Here’s what it looks like:

The video feed from a viewer’s webcam hits their Chrome browser, then Boinx’s servers, and is then routed directly into your Mimolive broadcast machine as a camera source. This happens over the internet, with no additional video hardware necessary on your part for the broadcast machine. It’s just there, appearing on your screen like any of your physically connected cameras. Not only that, but you can have multiple of these. Two web callers? No problem. Two more camera feeds on your screen. Arrange them however you like, including various split-screen and full-screen options.

As you do this, Mimolive automatically generates the mix-minus audio monitoring feeds back to the callers, so they hear everyone except themselves. You will never need to pull up an audio mixer window for this. It “just works”, a mantra that is often repeated yet rarely delivered.

In other words, Mimocall is a way to incorporate live video calling from multiple viewers, either one at a time or simultaneously, on a single broadcast machine, with the lowest-possible compute power and simplest possible interface of any solution we were able to devise on our own, for any dollar amount. And the Mimolive application itself is software we didn’t even know existed until a month before our go-live date with Batio. I just stumbled across a forum discussion somewhere, and kept clicking until I got to their web site.

The Boinx developers have built something with Mimocall which, to our knowledge, exists nowhere else. They are poised to take over the small broadcaster market if they can drive awareness of what Mimolive and Mimocall together can do.

At the moment, Mimocall is a free service while it’s still in public beta, though I suspect they will eventually figure out how to charge a few bucks for it when the feature set has stabilized. And if you teach or broadcast to the internet for a living, I suspect you’ll gladly pay those few bucks, as we would, to interface with our viewers in such a simple and high-quality fashion.

Disclaimer: Please note that we at Cracking the Code have no affiliation with Boinx Software other than as paying users of their product.

Ready to Rock

If this sounds like a lot of moving parts, we can only be thankful that the preparedness of our esteemed guest was not one of them:

Despite numerous Cape Kennedy-style dress rehearsals of exactly this scenario, when the day of the broadcast arrived, YouTube’s servers refused to respond. Buttons were mashed. Necks dampened. After about 20 minutes of fat-fingered fumbling in the YouTube dashboard, the live event was deleted and recreated. This effectively stranded hundreds of viewers at an old URL waiting for a broadcast which, like Godot, would never arrive.

As the hopeful waited silently out there in the blackness, the broadcast light on our screen finally went red. We swiveled from our keyboards to Batio, seated behind us, shredding a furious acoustic cadenza with the volume knob spun down, and asked if he was warmed up. He looked up from his fretboard, incredulous:

“I’m ready to rock!”

Luckily, we already were. Hit “play” on the video above to hear him say it!

Troy Grady is the creator of Cracking the Code, a documentary series with a unique analytical approach to understanding guitar technique. Melding archival footage, in-depth interviews, painstakingly crafted animation and custom soundtrack, it’s a pop-science investigation of an age-old mystery: Why are some players seemingly super-powered?

Source: www.guitarworld.com